Report Finds AI Tools Are Not Good at Citing Accurate Sources

Yeah, this is probably not overly surprising, but it still serves as a handy reminder of the limitations of the current wave of generative AI search tools, which social apps are now pushing you to use at every turn.

According to a new study conducted by the Tow Center for Digital Journalism, most of the major AI search engines fail to correctly cite news articles in response to queries. The tools often make up reference links, or simply don't provide an answer when asked about a source.

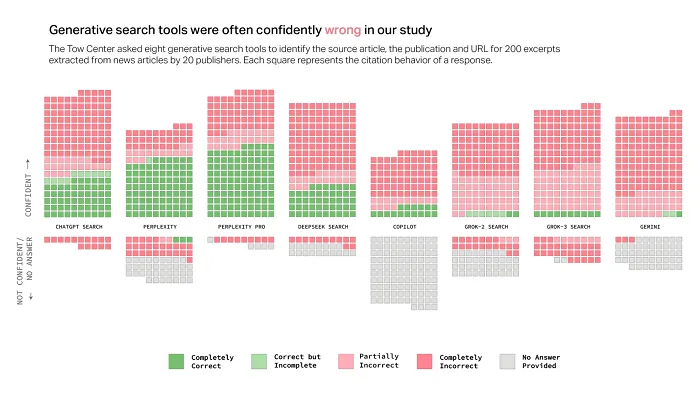

As you can see in this chart, most of the major AI chatbots weren’t particularly good at providing relevant citations, and xAI’s Grok chatbot, which Elon Musk has touted as the “most truthful” AI, was among the least accurate and reliable in this respect.

As per the report:

“Overall, the chatbots provided incorrect answers to more than 60% of queries. Across different platforms, the level of inaccuracy varied, with Perplexity answering 37% of the queries incorrectly, while Grok 3 had a much higher error rate, answering 94% of the queries incorrectly.”

On another front, the report found that these tools were sometimes able to provide information from sources that had been locked down to AI scraping:

“On some occasions, the chatbots either incorrectly answered or declined to answer queries from publishers that permitted them to access their content. On the other hand, they sometimes correctly answered queries about publishers whose content they shouldn’t have had access to.”

This suggests that some AI providers are not respecting the robots.txt directives that block their crawlers from accessing copyright-protected works.

But the topline concern relates to the reliability of AI tools, which a growing number of web users now treat as search engines. Indeed, many youngsters are now growing up with ChatGPT as their research tool of choice, and findings like this show that you simply cannot rely on AI tools to give you accurate information, or to educate you on key topics in any reliable way.

Of course, that’s not news as such. Anybody who’s used an AI chatbot will know that the responses are not always valuable, or even usable. But again, the bigger concern is that we’re promoting these tools as a replacement for actual research and a shortcut to knowledge. For younger users in particular, that could lead to a new age of ill-informed, less capable people who outsource their own reasoning to these systems.

Businessman Mark Cuban summed this problem up pretty accurately in a session at SXSW this week:

“AI is never the answer. AI is the tool. Whatever skills you have, you can use AI to amplify them.”

Cuban’s point is that while AI tools can give you an edge, and everyone should be considering how they can use them to enhance their performance, they are not solutions in themselves.

AI can create video for you, but it can’t come up with a story, which is the most compelling element. AI can produce code that’ll help you build an app, but it can’t build the actual app itself.

This is where you need your own critical thinking skills to expand these elements into something bigger, and while AI outputs can definitely help in this respect, they are not an answer in themselves.

The concern in this particular case is that we’re showing youngsters that AI tools can give them answers, which research has repeatedly shown they’re not particularly good at.

What we need is for people to understand how these systems can extend their abilities, not replace them, and that to get the most out of these systems, you first need to have key research and analytical skills, as well as expertise in related fields.

Originally published at Social Media Today